Make a VR Robot

The purpose of creating this robot was to have a platform for working with machine learning in the physical world rather than purely in software. In connection with this, I will include future po...

Make a Robot controlled with EyesBot App

This post looks at using an iOS app communicating with a Blend Micro via Bluetooth to control hardware....

Simple Computer Vision with Javascript

JavaScript can be used to get a matrix of pixels, which can then be used for computer vision. This post looks at a very simple application, which can look for a color palette and infer the presence of...
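The idea in this teaser can be illustrated with a minimal sketch. It is not the code from the post itself. It assumes the pixels arrive as a flat RGBA array, the layout used by the canvas `getImageData().data` property, and the names `paletteFraction` and `looksPresent`, the tolerance, and the 5% threshold are all hypothetical choices made for illustration.

```javascript
// Fraction of pixels whose color is within `tolerance` (per channel)
// of any color in `palette`. `data` is a flat RGBA array: [r, g, b, a, r, g, b, a, ...].
function paletteFraction(data, palette, tolerance) {
  const pixelCount = data.length / 4;
  let matches = 0;
  for (let i = 0; i < data.length; i += 4) {
    const r = data[i], g = data[i + 1], b = data[i + 2];
    const hit = palette.some(([pr, pg, pb]) =>
      Math.abs(r - pr) <= tolerance &&
      Math.abs(g - pg) <= tolerance &&
      Math.abs(b - pb) <= tolerance);
    if (hit) matches++;
  }
  return pixelCount === 0 ? 0 : matches / pixelCount;
}

// Infer that the object is present when enough pixels match the palette.
// The default tolerance and threshold are assumed values, not tuned ones.
function looksPresent(data, palette, tolerance = 30, minFraction = 0.05) {
  return paletteFraction(data, palette, tolerance) >= minFraction;
}

// A tiny 2x2 test image: two reddish pixels, one blue, one black.
const sample = new Uint8ClampedArray([
  250, 10, 10, 255,
  10, 10, 250, 255,
  240, 20, 5, 255,
  0, 0, 0, 255,
]);
const redPalette = [[255, 0, 0]];

console.log(paletteFraction(sample, redPalette, 30)); // 0.5
console.log(looksPresent(sample, redPalette));        // true
```

In a browser, `data` would typically come from drawing a video frame or image onto a canvas and reading it back. A per-channel tolerance is the simplest possible match; a distance in a perceptual color space would likely be more robust to lighting changes.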

Simple Obstacle Avoidance

This post discusses an easy algorithm for obstacle detection and avoidance. The techniques and code discussed in this article are related to two earlier posts........

Robots and Cassandra

One of the behaviors that is being evaluated for inclusion in EyesBot Driver is mapping, which can generate large amounts of robot sensor data in a bursty manner. This post will be about how to ...

Robotics: Controlling CIM Motors with Jaguar Motor Controllers using an Arduino

I couldn't find an article which contained source and discussion for running a CIM motor from a Jaguar controller using an Arduino, so if you are looking for this information, here it is. The ...

Building a Robot Controlled with an iPod - Easier Version

In an earlier post I described how to make a robot which is controlled using the free EyesBot Driver iOS app. That robot required quite a bit of soldering and left the design of the robot chassis...

Simple Computer Vision with Web Services

I have a previous post about using web services from robots. This post is focused on using a web service from the EyesBot Driver robot controller app. The audience for this particular blog post is...

Robot Reacting to Visual Stimulus

One of the behaviors that makes sentient beings appear sentient is the ability to react to the environment. In the case of reacting to an object, it's easy to mimic the behavior of a sentient ...

First Look at the MyRobots API

My last post was about using web services with robots, and I recently read a post in the LinkedIn group "Robotics Guru" about the new MyRobots.com cloud monitoring service from RobotShop (from wh...

Calling Web Services from a Robot

I'm starting to add autonomy to the robots that have been the main subject of this blog, and one of the major considerations in permitting autonomy is how to coordinate and modify the behavior of ...

Motion Detection and Tracking in C#

This is one of my infrequent technical posts, but it is related to one of my products.

I've written a few home/business security-related apps that can monitor your residence or business while you are ...

Building a Robot Controlled with an iPod - Part One

This post describes how to make a robot which is controlled using the EyesBot Driver iOS app (which should be in the app store around June 3, if it passed the App Store review process without issu...

Building a Robot Controlled with an iPod - Part Two

This post continues the instructions about how to make a robot which is controlled using the EyesBot Driver iOS app........

Measure the Volume of Liquid in a Container using an Arduino and a Pressure Sensor

This is one of the occasional technical articles I'll be posting. The solution that is described below stems from a problem that a few friends and I were discussing at lunch one day in Boulder, Col...

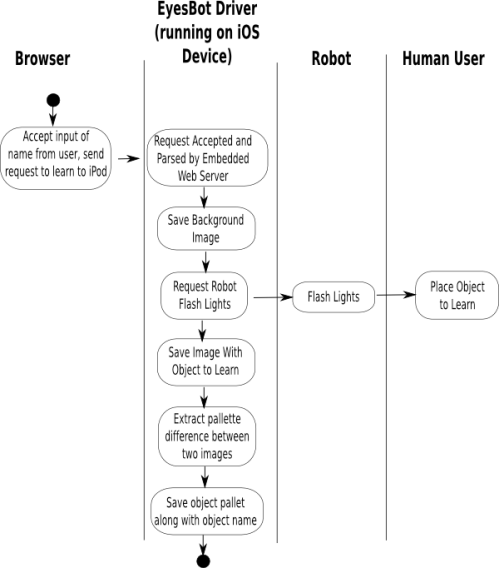

Applied Object Recognition

I looked at a few options for measuring distance for a small robot I'm working on, but it occurred to me that I should be able to have the robot simply shine an LED on something, and, based on t...

Robotics: How to Use an LED to Determine Distances

I looked at a few options for measuring distance for a small robot I'm working on, but it occurred to me that I should be able to have the robot simply shine an LED on something, and, based on t...

Communicating with an Arduino or Hardware from an iOS Device

I built a robot which uses an iPod as its brain, and the question of how to interface the iOS device to the hardware was probably the most difficult early decision in the design process. There ...

Using NPN and PNP Transistors

I use transistors frequently to drive components that take more current than an Arduino can supply, and infrequently to amplify signals. This post attempts to answer two really common questions ...

Optimum Deployment of Vision Based Security Devices

Several of our software products, which run on iPhones, iPods, and iPads, use simple computer-vision-based algorithms to help alert the user to potential security issues at their home or office...

Object Recognition

This is related to the motion tracking blog post that I posted to my company's blog a few weeks ago, and builds on the same source code. The problem I was trying to solve was how to teach an auto...

Applied Physical Computing

The EyesBot line of apps has some degree of autonomy and ability to influence and be influenced by its environment - for example, EyesBot Watcher detects motion, tracks motion with its "Eyes" and...

This turned out to be quite simple to implement, and permits easy communication between the robot and user (watch the video to see the learning in action). The code for this choreography is:


Computer vision


Artificial intelligence


Effecting the physical world
